“Black girls.” “Latina girls.” “Asian girls.” Back in 2009, when Safiya Noble conducted a Google search using these keywords, the first page of results was invariably linked to pornography.

“I was interested in women and girls of colors’ identities and what kind of information is represented for them, about them,” said Noble, assistant professor of communication. “I collected a whole host of searches and tried to document all the ways in which a Google search has really failed the public, particularly people who are oppressed, under-represented people who live in the margin, people who don’t have access to a lot of resources.”

According to Noble, this is further compounded by a dependence upon the Internet to supplement what society is not providing, like high-quality education, access to libraries and other types of traditional sources of knowledge.

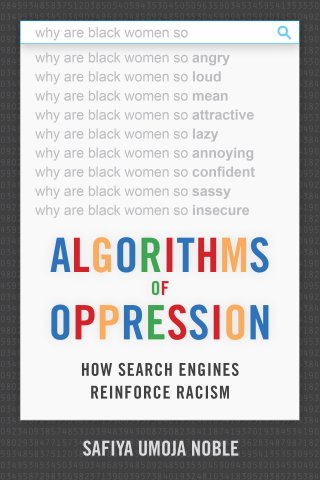

In her book, Algorithms of Oppression: How Search Engines Reinforce Racism, which was published by New York University Press this month, Noble delves into the ways search engines misrepresent a variety of people, concepts, types of information and knowledge. Her aim: to get people thinking and talking about the prominent role technology plays in shaping our lives and our future.

What I discovered through my research is that algorithms are now doing the curatorial work that human beings like librarians or teachers used to do. When I initially came up with the title, back in 2012, the word ‘algorithm’ wasn’t used the way it's used today. It wasn’t in the headlines; journalists weren't really talking about algorithms. Fast forward to 2018, and my mother-in-law is talking about algorithms. Now everybody understands the word.

Initially, I got a lot of pushback around using the phrase algorithms, that it would be too opaque or the public wouldn’t really understand. But I think now we’ve done a lot more heavy lifting about what technology companies are doing and what automated decision-making systems like algorithms are.

I hope my book puts a spotlight on how these algorithms lead to further oppression and marginalization of, primarily, women of color, but they also do a disservice to understanding complex ideas about society.

Why is this topic timely in this day and age?

When I first started interrogating Google’s output, it was incredibly unpopular. I got a lot of pushback from people in academe and people in industry who said, “Google is the peoples’ company,” and to critique them was unfair because they were doing so much more than any other company had done to make information accessible. And on one hand that’s true, particularly banal kinds of information like, “Where’s the closest drive-thru Starbucks?” That’s a really good use of Google. But when you start looking at conceptual ideas or you’re looking for knowledge, then Google starts to fail miserably.

When I began presenting evidence on the ways that Google was misrepresenting women and girls of color in particular, nobody really cared except women and girls of color. But now that Google and Facebook and other tech companies are deeply implicated, for example, in influencing a presidential election, now people really care. So, in some ways, I think that was a high price for us to pay for our awareness. But, at the same time, we have an opening now to rethink the incredible amount of power and resources that Silicon Valley companies, or tech companies around the world, have in shaping our democracy, or undermining it.

How does tech design impact and intersect race, gender and culture?

Many people think that technology is neutral, that it’s objective, that it’s just a tool, that it’s based on math or physics, and has no values, that it’s value free. That’s the dominant narrative around technology and engineering in particular. One of the things my work does is to interrogate the fundamental building blocks of technology. Computer language is, in fact, a language. And as we know in the humanities, language is subjective. Language can be interpreted in a myriad of ways.

So, as those interpretations are happening, what we see is content that favors its creators. This is typically what proliferates. And, of course, the primary creators in Silicon Valley of the technologies are white and Asian men, but mostly white men. They make the technology in their image and in their interests. They’re not thinking about women. In fact, Silicon Valley is rife with all kinds of deeply structural problems and inequalities with respect to women. And certainly of course with under-represented minorities like African Americans, Latinos and indigenous peoples, things start to fall apart.

So, I start with thinking about the technology designers and how they're designing with their values in mind, whether they know it or not. Those are the kinds of things that I’m also interrogating in the book.

You say in your book that data discrimination is a real social problem. Can you explain that?

In the past, the term “redlining” typically conjured up the image of a banker sitting across from a Black or Latino family and making a decision that they can’t be financed for a home. Or they can’t get a small business loan. These are face-to-face discriminatory processes.

We now face a new era framed by what I call “technological redlining” — the way data is used to profile us. We have new models where financial institutions are looking at our social networks to make decisions about us. For example, if we have too many people who seem to be a credit risk in our social networks, then that actually impacts the decisions that get made about us.

Before, we could say, “Well, that was a racist banker.” Or, “That was a sexist banker.” And maybe we could go to court. Maybe we could file a complaint. We had different kinds of legal mechanisms. Right now we don’t have a mechanism to take the algorithm that sorted us into the wrong category to court. So we have a deepening of structural inequality that data is contributing to, and that is incredibly difficult to intervene upon. We are increasingly sorted into categories that quite frankly we can't sort ourselves out of.

What do you want people to walk away with after reading your book?

In other industries for example, we’ve seen over time a shift in public awareness about things like climate change because the research now is so accessible to us. I think we need that same kind of robust attention directed toward how the tech sector is rapidly re-shaping the quality of life for people who live on planet Earth, and why should we care about it.

I wrote this book in a way that should be accessible to the public. It’s not a book that's just for academics or that only belongs in a university classroom. What I hope is that people will share the book and talk in their families and with their friends about these phenomena. I hope my book leads people to question the prominence of these technologies in our lives, and even make choices when it comes to looking at the people they vote for, the platforms they engage with, and the ecosystem of media and technology that shapes our lives.

At the 2018 MAKERS Conference, USC Annenberg Dean Willow Bay talks with Safiya Noble, assistant professor of communication, about misinformation on the Internet and social responsibility.